NeRS Paper Figures — Video Versions

Figure 1

| Nearest Neighbor Input | Initial Car Mesh | Output Radiance | Predicted Texture | Illumination of Mean Texture |

| NN Input | Output | Interpolation | NN Input | Output | Interpolation | NN Input | Output | Interpolation |

Figure 5

| Nearest Neighbor Input | Initial Mesh | NeRS (Ours) | Shape Interpolation |

Figure 6

| NN Training View | NeRS | IDR | NeRF* | MetaNeRF | MetaNeRF-ft |

| NN Training View | NeRS | IDR | NeRF* | NeRS w/ NeRF-style View-dep. |

Figure 7

| NN Training View | NeRS | IDR | NeRF* | NeRS w/ NeRF-style View-dep. |

Figure 8

| NN Training View | NeRS | Illumination of Mean Texture | NN Training View | NeRS | Illumination of Mean Texture |

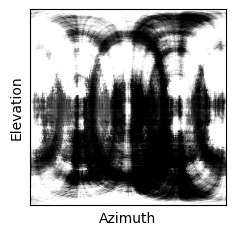

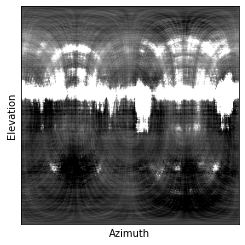

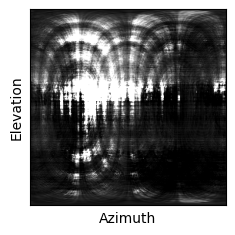

Figure 10

| Nearest Neighbor Training View | NeRS no View-Dep. | NeRS (Ours) | Illumination of Mean Texture | Environment Map |

Figure 11

| Nearest Neighbor Training View | Mask Carving using Initial Cameras | Mask Carving using Pre-trained Cameras | Mask Carving using Optimized Cameras | Learned Shape Model | NeRS (Ours) |